Introduction:

Diffusion models have emerged as a powerful technique for generating complex, high-dimensional outputs, such as images or protein structures. They are typically trained to match the distribution of their training data, but there is growing interest in training them to achieve specific goals instead. In this blog, we explore how GPT-4, a large multimodal model, can be combined with Stable Diffusion to enhance prompt understanding in text-to-image diffusion models.

The Power of Diffusion Models:

Diffusion models have gained popularity because they can transform random noise into realistic samples. Trained on large datasets, they generate high-quality images and other outputs that closely resemble their training data. However, matching the training data is not always the real objective. In many applications, the goal is a specific outcome, such as an image that faithfully follows a particular prompt.
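To make the noise-to-sample idea concrete, here is a minimal Python sketch of the forward (noising) half of a diffusion model, which is the process a trained model learns to reverse. It assumes the common DDPM-style linear variance schedule; the function names and constants are illustrative choices, not Stable Diffusion's actual configuration.

```python
import math
import random


def linear_beta_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> list:
    """Per-step noise variances beta_1..beta_T, increasing linearly (illustrative constants)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas: list) -> list:
    """alpha_bar_t = prod_{s<=t} (1 - beta_s): how much of the original signal survives step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noise_sample(x0: list, alpha_bar: float, rng: random.Random) -> list:
    """Draw x_t ~ N(sqrt(alpha_bar) * x_0, (1 - alpha_bar) * I) in a single jump from x_0."""
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    return [signal_scale * x + noise_scale * rng.gauss(0.0, 1.0) for x in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)
x0 = [1.0, -0.5, 0.25]                           # a toy 3-value "image"
x_early = noise_sample(x0, alpha_bars[9], rng)   # still close to x0
x_final = noise_sample(x0, alpha_bars[-1], rng)  # almost pure Gaussian noise
```

By the last step almost none of the signal remains (`alpha_bars[-1]` is roughly 4e-5), so `x_final` is essentially pure noise. A diffusion model is trained to undo these steps one at a time, which is why sampling can start from random noise and end at a realistic sample.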

Introducing GPT-4:

GPT-4, developed by OpenAI, is a large multimodal model that accepts both image and text inputs and emits text outputs. Although it is less capable than humans in many real-world scenarios, it performs remarkably well at generating creative and coherent text. By combining GPT-4’s language understanding with Stable Diffusion, we can enhance the prompt understanding of text-to-image diffusion models.

Stable Diffusion and Denoising Diffusion Policy Optimization:

Stable Diffusion is a latent text-to-image diffusion model. To train a diffusion model like it on a downstream objective, rather than on matching the data distribution, the denoising process can be reframed as a multi-step Markov decision process (MDP): each denoising step is an action, and a reinforcement learning (RL) algorithm adjusts the model to maximize the reward assigned to the final sample. For a text-to-image model, that reward measures how well the generated image meets the goal, for example how closely it aligns with the given prompt or textual description.
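The MDP view can be sketched in a few lines. In this minimal Python sketch, which follows our reading of the formulation, the state at each step is the prompt context `c`, the timestep `t`, and the current noisy sample `x_t`; the action is the next, less noisy sample `x_{t-1}`; and the reward is zero on every step except the one that produces the final sample `x_0`. The one-dimensional Gaussian "policy" (`mean_fn`, `std`) and the toy reward are hypothetical stand-ins for a real denoising network and reward model.

```python
import math
import random
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Step:
    t: int           # timestep of the state
    x_t: float       # state: the current noisy sample
    x_prev: float    # action: the sampled, less noisy x_{t-1}
    log_prob: float  # log pi(x_{t-1} | x_t, c) under the policy
    reward: float    # non-zero only on the step that produces x_0


def gaussian_log_prob(x: float, mean: float, std: float) -> float:
    return -0.5 * ((x - mean) / std) ** 2 - math.log(std) - 0.5 * math.log(2.0 * math.pi)


def rollout(mean_fn: Callable[[float, int, float], float],
            reward_fn: Callable[[float, float], float],
            context: float, num_steps: int, std: float,
            rng: random.Random) -> List[Step]:
    x_t = rng.gauss(0.0, 1.0)  # initial state: x_T drawn from pure noise
    trajectory = []
    for t in range(num_steps, 0, -1):
        mean = mean_fn(x_t, t, context)  # hypothetical denoiser output
        x_prev = rng.gauss(mean, std)    # stochastic action
        reward = reward_fn(x_prev, context) if t == 1 else 0.0  # t == 1 yields x_0
        trajectory.append(Step(t, x_t, x_prev, gaussian_log_prob(x_prev, mean, std), reward))
        x_t = x_prev
    return trajectory


# Toy policy that pulls the sample toward the context value, and a toy reward
# for ending up near it; both are made up for illustration.
toy_mean = lambda x, t, c: 0.8 * x + 0.2 * c
toy_reward = lambda x0, c: -abs(x0 - c)

traj = rollout(toy_mean, toy_reward, context=2.0, num_steps=50, std=0.1, rng=random.Random(0))
```

Because only the final step is rewarded, the RL algorithm has to assign credit for the final sample back across every denoising step; the stored per-step log-probabilities are what a policy-gradient method uses to do that.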

Denoising diffusion policy optimization (DDPO) applies this idea: it is a policy-gradient RL method for finetuning a diffusion model, such as Stable Diffusion, on a reward function. Two variants have been evaluated on several reward functions: DDPO SF, which uses the score-function (REINFORCE) gradient estimator, and DDPO IS, which uses an importance-sampling estimator so that several optimization steps can reuse the same batch of samples. DDPO IS performed best, demonstrating that DDPO can effectively finetune Stable Diffusion for objectives such as image compressibility.
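The difference between the two variants shows up in the surrogate objective each one differentiates. The following Python sketch is our illustration, not a reference implementation. `ddpo_sf_loss` is the score-function surrogate, valid only for samples drawn from the current model; `ddpo_is_loss` weights each step by the importance ratio between the current and the sampling model and clips that ratio in the style of PPO, so updates stay close to the model that produced the samples. `advantage` is the trajectory's (normalized) reward, taken as given, and the clip range of 0.2 is the usual PPO default, an assumption here. For the compressibility objective, the reward is the negative compressed file size; the sketch swaps in `zlib` for a JPEG encoder so it runs with the standard library alone. In practice the log-probabilities would be tensors in an autodiff framework; plain floats are used only to show the arithmetic.

```python
import math
import random
import zlib
from typing import Sequence


def compressibility_reward(image_bytes: bytes) -> float:
    """Negative compressed size in kilobytes: smaller files earn higher reward.
    zlib stands in for a JPEG encoder (an assumption made to stay dependency-free)."""
    return -len(zlib.compress(image_bytes, 9)) / 1000.0


def ddpo_sf_loss(log_probs: Sequence[float], reward: float) -> float:
    """Score-function surrogate: its gradient is -reward * sum_t grad log p(x_{t-1} | x_t, c)."""
    return -reward * sum(log_probs)


def ddpo_is_loss(new_log_probs: Sequence[float], old_log_probs: Sequence[float],
                 advantage: float, clip_range: float = 0.2) -> float:
    """Importance-sampled surrogate with a PPO-style clipped ratio per denoising step."""
    if len(new_log_probs) != len(old_log_probs):
        raise ValueError("both trajectories must have one log-prob per denoising step")
    total = 0.0
    for new_lp, old_lp in zip(new_log_probs, old_log_probs):
        ratio = math.exp(new_lp - old_lp)  # p_theta / p_theta_old for this step
        clipped = min(max(ratio, 1.0 - clip_range), 1.0 + clip_range)
        # Keep the pessimistic (smaller) objective, then negate it to get a loss.
        total -= min(ratio * advantage, clipped * advantage)
    return total


# Usage: a flat "image" earns more reward than random bytes of the same size.
rng = random.Random(0)
flat_reward = compressibility_reward(bytes(4096))
noisy_reward = compressibility_reward(bytes(rng.getrandbits(8) for _ in range(4096)))
```

The clipping is what lets the importance-sampled variant take several gradient steps on one batch: once the ratio leaves the clip range in the direction the advantage favors, that step stops contributing gradient.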

Enhancing Prompt Understanding:

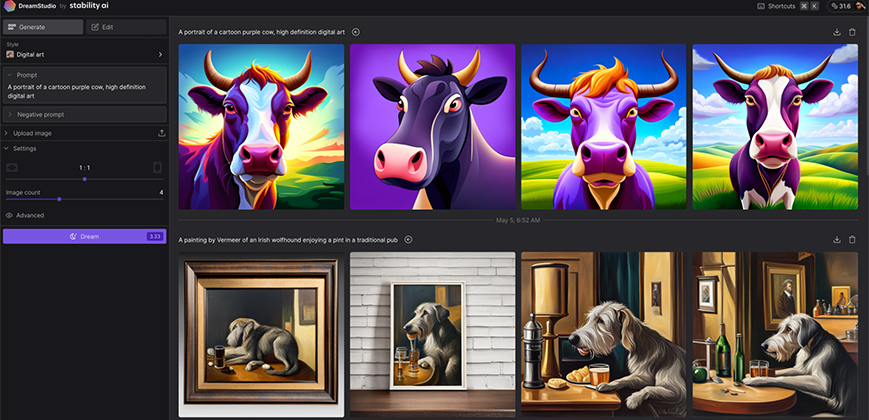

By combining GPT-4’s language understanding with Stable Diffusion and DDPO, we can enhance the prompt understanding of text-to-image diffusion models. GPT-4 does not generate the images itself, since it emits only text. Its role is to interpret the prompt, drawing on the knowledge it acquired from training on large datasets, and to pass a clearer description to the diffusion model, which then renders the image. This division of labor lets the diffusion model generate samples that align more closely with the provided prompts, resulting in more accurate and relevant outputs.
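Since GPT-4 emits text rather than images, one simple way to pair it with a diffusion model is a two-stage pipeline: the language model rewrites the user's prompt into a more explicit description, and the diffusion model renders that description. The Python sketch below is a hypothetical illustration of this pattern, not the method of any specific system; `REWRITE_INSTRUCTION`, `expand_prompt`, and `generate` are made-up names, and both models are passed in as plain callables.

```python
from typing import Callable, TypeVar

Image = TypeVar("Image")

# Illustrative instruction text; the wording is an assumption, not a tuned prompt.
REWRITE_INSTRUCTION = (
    "Rewrite the following image prompt so that every object, count, attribute, "
    "and spatial relation is stated explicitly. Reply with the rewritten prompt only.\n\n"
    "Prompt: {prompt}"
)


def expand_prompt(prompt: str, llm: Callable[[str], str]) -> str:
    """Ask a text-only LLM for a more explicit prompt; keep the original if the reply is empty."""
    reply = llm(REWRITE_INSTRUCTION.format(prompt=prompt)).strip()
    return reply or prompt


def generate(prompt: str, llm: Callable[[str], str],
             text_to_image: Callable[[str], Image]) -> Image:
    """Two-stage pipeline: the LLM produces only text, the diffusion model renders the image."""
    return text_to_image(expand_prompt(prompt, llm))
```

In a real system, `llm` might wrap a request to OpenAI's Chat Completions API with a GPT-4 model, and `text_to_image` might wrap a Stable Diffusion pipeline such as `StableDiffusionPipeline` from Hugging Face `diffusers`. Both are left as injected callables so the sketch runs offline and makes no assumptions about those APIs.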

Applications and Future Implications:

The enhanced prompt understanding of text-to-image diffusion models has applications across many fields. In creative work, it can generate artwork from textual descriptions or support the design process by turning rough ideas into detailed visual representations. In industries such as advertising and e-commerce, it can generate product images from textual descriptions or user preferences.

However, integrating GPT-4 and Stable Diffusion also raises important ethical considerations. More capable models can be misused, for example to spread misinformation, if they are not properly regulated. It is crucial to ensure that generated outputs follow ethical guidelines and avoid bias and harmful content.

Conclusion:

The combination of GPT-4 and Stable Diffusion presents an exciting opportunity to enhance the prompt understanding of text-to-image diffusion models. By leveraging GPT-4’s language understanding capabilities and applying DDPO, we can generate image samples that align more accurately with textual prompts. This advancement has numerous applications across various industries, but it also requires thoughtful consideration of ethical implications. As we continue to explore the possibilities of this integration, it is essential to prioritize responsible development and usage to harness the full potential of these powerful technologies.